Luis Quintero

Associate Senior Lecturer

About me

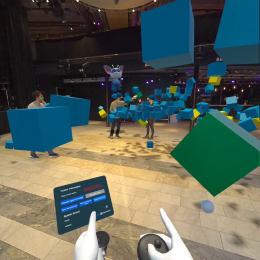

I am part of the Data Science and STIR groups at DSV, Stockholm University. My research interests intersect emergent technologies (extended reality, XR) and applied data science and AI for behavioral user modeling.

My projects explore these research questions:

- how can co-located mixed reality support novel interactions for public artistic performances?

- how to create novel interactive immersive systems for industrial applications and accessibility?

- how to integrate AI-supported decision-making tools into practice with explainable user interfaces?

- how to design personalized adaptive environments in digital eyewear from real-time body monitoring?

More information on my personal website.

Teaching

I have participated in the following courses:

- Data Science for Health Informatics and Design

- Data Mining

- Design for Emerging Technologies

- Design for Complex and Dynamic Contexts

- Project Management for Health Informatics

Research projects

Publications

A selection from Stockholm University publication database

-

AVDOS-VR: Affective Video Database with Physiological Signals and Continuous Ratings Collected Remotely in VR

2024. Michal Gnacek (et al.). Scientific Data 11

Article

Investigating emotions relies on pre-validated stimuli to evaluate induced responses through subjective self-ratings and physiological changes. The creation of precise affect models necessitates extensive datasets. While datasets related to pictures, words, and sounds are abundant, those associated with videos are comparatively scarce. To overcome this challenge, we present the first virtual reality (VR) database with continuous self-ratings and physiological measures, including facial EMG. Videos were rated online using a head-mounted VR device (HMD) with attached emteqPRO mask and a cinema VR environment in remote home and laboratory settings with minimal setup requirements. This led to an affective video database with continuous valence and arousal self-rating measures and physiological responses (PPG, facial-EMG (7x), IMU). The AVDOS-VR database includes data from 37 participants who watched 30 randomly ordered videos (10 positive, neutral, and negative). Each 30-second video was assessed with two-minute relaxation between categories. Validation results suggest that remote data collection is ecologically valid, providing an effective strategy for future affective study designs. All data can be accessed via: www.gnacek.com/affective-video-database-online-study.

-

Codeseum: Learning Introductory Programming Concepts through Virtual Reality Puzzles

2024. Johan Ekman, Jordi Solsona Belenguer, Luis Eduardo Velez Quintero. Proceedings of the 2024 ACM International Conference on Interactive Media Experiences, 192-200

Conference

When learning programming concepts, beginners face challenges that lead to decreased motivation. Game-Based Learning (GBL) uses game principles to make learning more engaging, and VR has been explored as a way to enhance GBL further. This paper explores the impact of Virtual Reality (VR) on learning programming, and we developed Codeseum to compare whether VR-based learning is perceived as more engaging and usable than a desktop game counterpart. The experiment with ten participants included data from questionnaires, interviews, and structured observations. The quantitative analysis indicated that VR was perceived as inducing higher focused attention, aesthetic appeal, and reward, while the thematic analysis provided discussion elements of seven themes, including interaction, engagement, and physical expressions from the participants. Overall, the desktop application had better accessibility, whereas the immersive interactions from Codeseum in VR induced higher levels of enjoyment and engagement. Our study contributes insights into the potential of VR in education, mainly teaching coding skills in engaging ways, and offers information to adopt immersive technologies in teaching practice.

-

Envisioning Ubiquitous Biosignal Interaction with Multimedia

2024. Ekaterina Stepanova (et al.). MUM '24, 495-500

Conference

Biosensing technologies are on their way to becoming ubiquitous in multimedia interaction. These technologies capture physiological data, such as heart rate, breathing, skin conductance, and brain activity. Researchers are exploring biosensing from perspectives including engineering, design, medicine, mental health, consumer products, and interactive art. These technologies can enhance our interactions, allowing us to connect with our bodies and others around us across diverse application areas. However, the integration of biosignals in HCI presents new challenges pertaining to choosing what data we capture, interpreting these data, its representation, application areas, and ethics. There is a need to synthesize knowledge across diverse perspectives of researchers and designers spanning multiple domains and to map a landscape of the challenges and opportunities of this research area. The goal of this workshop is to exchange knowledge in the research community, introduce novices to this emerging field, and build a future research agenda.

-

Effects of Third-Person Locomotion Techniques on Sense of Embodiment in Virtual Reality

2024. Johanna Ulrichs, Andrii Matviienko, Luis Eduardo Velez Quintero. MUM '24, 72-81

Conference

Virtual Reality (VR) has enabled novel ways to study embodiment and understand how a virtual avatar may be treated as part of a person's body. These studies mainly employ virtual bodies perceived from a first-person perspective, given that VR has a default egocentric view. Third-person perspective (3PP) within VR has positively influenced the navigation time and spatial orientation in large virtual worlds. However, the relationship between VR locomotion in 3PP and the sense of embodiment in the users remains unexplored. In this paper, we proposed three VR locomotion techniques in 3PP (controller joystick, head tilt, arm swing). We evaluated them in a user study (N=16) focusing on their influence on the sense of embodiment, perceived usability, VR sickness, and completion time. Our results showed that arm swing and head tilt facilitate higher embodiment than a controller joystick but lead to higher completion times and oculomotor sickness.

-

Comparing Early-Stage Symptoms of Spatial Disorientation Between Virtual Reality Navigation and Paper-Based MoCA Test

2024. Maria Dodieva, Luis Eduardo Velez Quintero. MUM '24, 445-447

Conference

Spatial disorientation is an early symptom of Alzheimer's Disease (AD), commonly diagnosed through paper-based tests like MoCA. There is a growing need for accessible technological solutions for early-stage AD diagnosis. Few studies have compared spatial orientation in Virtual Reality (VR) environments with standardized tests. This paper contributes with a VR-based navigation task for early-stage diagnosis of spatial disorientation and an empirical evaluation (N=20) comparing spatial navigation with the MoCA scores. Results suggest that allocentric spatial orientation decreases as the VR task becomes harder and that navigation time was higher in participants who failed the paper-based test. Preliminary findings suggest VR could be effective for preventive screening and early AD symptom identification related to allocentric orientation.

-

Personalized Feature Importance Ranking for Affect Recognition From Behavioral and Physiological Data

2023. Luis Eduardo Velez Quintero, Uno Fors, Panagiotis Papapetrou. IEEE Transactions on Games (TG)

Article

Designing affect-based personalized technology involves dealing with large datasets. Machine learning (ML) algorithms are employed to predict affect and similar human factors from in-game metrics, behavioral patterns, or physiological responses. The classification performance is usually presented as a global point estimate without providing user-specific interpretations. This approach is incompatible with effective personalization in games because it disregards the variability of body responses between players. This paper proposes a methodology to classify subjective human factors from large multimodal data. A public VR dataset (CEAP-360VR) was used to extensively compare three ML classifiers and five feature importance techniques. The produced models could reduce the original feature space by 82% (from 113 to 20 features) without compromising predictive performance (F1 score). A random forest (RF) using forward sequential feature selection (fSFS) yielded the best prediction of binary valence (F1=0.761) and arousal (F1=0.748). Finally, feature importance rankings are discussed with emphasis on global and user-specific patterns that may improve affect recognition. The proposed methodology is envisioned to help game designers and researchers create customized user-centric games and VR experiences inferring possible explanations from multimodal datasets.

-

User Modeling for Adaptive Virtual Reality Experiences: Personalization from Behavioral and Physiological Time Series

2023. Luis Quintero.

Thesis (Doc)

Research in human-computer interaction (HCI) has focused on designing technological systems that serve a beneficial purpose, offer intuitive interfaces, and adapt to a person's expectations, goals, and abilities. Nearly all digital services available in our daily lives have personalization capabilities, mainly due to the ubiquity of mobile devices and the progress that has been made in machine learning (ML) algorithms. Web, desktop, and smartphone applications inherently gather metrics from the system and users' activity to improve the attractiveness of their products and services. Meanwhile, the hardware, input interfaces, and algorithms currently under development guide the designs of upcoming interactive systems that may become pervasive in society, such as immersive virtual reality (VR) or physiological wearable sensing systems. These technological advancements have led to multiple questions regarding the personalization capabilities of modern visualization mediums and fine-grained body measurements. How does immersive VR enable new pathways for understanding the context in which a user interacts with a system? Can the user's behavioral and physiological data improve the accuracy of ML models estimating human factors? What are the challenges and risks of designing personalized systems that transcend current setups with a 2D-based display, touchscreen, keyboard, and mouse? This thesis provides insights into how human behavior and body responses can be incorporated into immersive VR applications to enable personalized adaptations in 3D virtual environments. The papers contribute frameworks and algorithms that harness multimodal time-series data and state-of-the-art ML classifiers in user-centered VR applications. The multimodal data include motion trajectories and body measurements from the user's brain and heart, which are used to capture responses elicited by virtual experiences. The ML algorithms exploit the temporality of large datasets to perform automatic data analysis and provide interpretable explanations about signals that correlate with the user's skill level or emotional states. Ultimately, this thesis provides an outlook on how the combination of recent hardware and algorithms may unlock unprecedented opportunities to create 3D experiences tailored to each user and to help them attain specific goals with VR-based systems, framed using the overarching topic of context-aware systems and discussing the ethical risks related to personalization based on behavioral and physiological time-series data in immersive VR experiences.

-

CS:NO - an Extended Reality Experience for Cyber Security Education

2022. Melina Bernsland (et al.). IMX 2022 - Proceedings of the 2022 ACM International Conference on Interactive Media Experiences, 287-292

Conference

This work-in-progress presents the design of an XR prototype for the purpose of teaching basic cybersecurity concepts. We have designed an experimental virtual reality cyberspace to visualise data traffic over a network, enabling the user to interact with VR representations of data packets. Our objective was to help the user better conceptualise abstract cybersecurity topics such as encryption and decryption, firewall and malicious data. Additionally, to better stimulate the sense of immersion we have used Peltier thermoelectric modules and Arduino Uno to experiment with multisensory XR. Furthermore, we reflect on early evaluation of this experimental prototype and present potential paths for future improvements.

-

Excite-O-Meter: an Open-Source Unity Plugin to Analyze Heart Activity and Movement Trajectories in Custom VR Environments

2022. Luis Quintero (et al.). 2022 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), 46-47

Conference

This article explains the new features of the Excite-O-Meter, an open-source tool that enables the collection of bodily data, real-time feature extraction, and post-session data visualization in any custom VR environment developed in Unity. Besides analyzing heart activity, the tool now supports multidimensional time series to study motion trajectories in VR. The paper presents the main functionalities and discusses the relevance of the tool for behavioral and psychophysiological research.

-

Effective Classification of Head Motion Trajectories in Virtual Reality using Time-Series Methods

2021. Luis Eduardo Velez Quintero (et al.). 2021 IEEE International Conference on Artificial Intelligence and Virtual Reality (AIVR), 38-46

Conference

In this paper, we present a method to classify head motion trajectories using recent time-series methods. The analysis of motion data with machine learning is a common technique to solve problems in Virtual Reality (VR), such as adaptive rendering or user behavioral modeling. Motion data are initially collected as time series, but they are usually transformed into tabular features compatible with traditional feature-based classifiers. Data mining research has proposed several time-series classifiers that can directly exploit the temporal relationship of the data without requiring manual feature extraction. Nevertheless, the effectiveness of these time-series methods still requires validation on real-life problem domains. Therefore, this paper demonstrates how a pipeline that combines a recent time-series classifier with two rotation space representations (quaternion and Euler) can successfully analyze head motion in VR applications. We test the proposed method on two public datasets containing head rotations, resulting in higher prediction accuracy than other feature-based and time-series classifiers. We also discuss some limitations, guidelines for future work, and concluding remarks.

-

Excite-O-Meter: Software Framework to Integrate Heart Activity in Virtual Reality

2021. Luis Eduardo Velez Quintero (et al.). 2021 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), 357-366

Conference

Bodily signals can complement subjective and behavioral measures to analyze human factors, such as user engagement or stress, when interacting with virtual reality (VR) environments. Enabling widespread use (including real-time analysis) of bodily signals in VR applications could be a powerful method to design more user-centric, personalized VR experiences. However, technical and scientific challenges (e.g., cost of research-grade sensing devices, required coding skills, expert knowledge needed to interpret the data) complicate the integration of bodily data in existing interactive applications. This paper presents the design, development, and evaluation of an open-source software framework named Excite-O-Meter. It allows existing VR applications to integrate, record, analyze, and visualize bodily signals from wearable sensors, with the example of cardiac activity (heart rate and its variability) from the chest strap Polar H10. Survey responses from 58 potential users determined the design requirements for the framework. Two tests evaluated the framework and setup in terms of data acquisition/analysis and data quality. Finally, we present an example experiment that shows how our tool can be an easy-to-use and scientifically validated tool for researchers, hobbyists, or game designers to integrate bodily signals in VR applications.

-

Taxonomy of Physiologically Adaptive Systems and Design Framework

2021. John E. Muñoz (et al.). International Conference on Human-Computer Interaction, 559-576

Conference

The design of physiologically adaptive systems entails several complex steps, from acquiring human body signals to creating responsive adaptive behaviors that can be used to enhance conventional communication pathways between human and technological systems. Categorizing and classifying the computing techniques used to create intelligent adaptation via physiological metrics is an important step towards creating a body of knowledge that allows the field to develop and mature accordingly. This paper proposes the creation of a taxonomy that groups several physiologically adaptive (also called biocybernetic) systems that have been previously designed and reported. The taxonomy proposes two subcategories of adaptive techniques: control theoretics and machine learning, which have multiple sub-categories that we illustrate with systems created in the last decades. Based on the proposed taxonomy, we also propose a design framework that considers four fundamental aspects that should be defined when designing physiologically adaptive systems: the medium, the application area, the psychophysiological target state, and the adaptation technique. We conclude the paper by discussing the importance of the proposed taxonomy and design framework as well as suggesting research areas and applications where we envision biocybernetic systems will evolve in the following years.

-

Understanding research methodologies when combining virtual reality technology with machine learning techniques

2020. Luis Quintero. PETRA '20, 1-4

Conference

Virtual Reality (VR) technology represents a new medium to provide immersive solutions in different fields. The analysis of a user while interacting in VR, through data science and machine learning (ML) techniques, might provide insights to deliver customized functionalities that enhance productivity and efficiency in learning tasks in education or rehabilitation processes in healthcare. However, empirical research involving VR often borrows methods from human-computer interaction intending to evaluate human behavior through technology, whereas ML intends to create mathematical models, usually with a non-empirical approach. Their opposite nature might cause confusion for early-stage researchers wanting to understand and follow the methodological approaches and communicative practices in empirical studies that merge both VR and ML. This paper presents a scoping review of methodological strategies undertaken in 21 peer-reviewed research articles that involve both VR and ML. Results show and appraise different methodological approaches in research projects, and outline a set of recommendations to combine metrics from inferential statistics and evaluation of ML models to increase validity, reliability and trustworthiness in future research projects that intersect VR and ML.

-

A Psychophysiological Model of Firearms Training in Police Officers

2020. John E. Muñoz (et al.). Frontiers in Psychology 11

Article

Crucial elements for police firearms training include mastering very specific psychophysiological responses associated with controlled breathing while shooting. Under high-stress situations, the shooter is affected by responses of the sympathetic nervous system that can impact respiration. This research focuses on how frontal oscillatory brainwaves and cardiovascular responses of trained police officers (N = 10) are affected during a virtual reality (VR) firearms training routine. We present data from an experimental study wherein shooters were interacting in a VR-based training simulator designed to elicit psychophysiological changes under easy, moderate and frustrating difficulties. Outcome measures in this experiment include electroencephalographic and heart rate variability (HRV) parameters, as well as performance metrics from the VR simulator. Results revealed that specific frontal areas of the brain elicited different responses during resting states when compared with active shooting in the VR simulator. Moreover, sympathetic signatures were found in the HRV parameters (both time and frequency) reflecting similar differences. Based on the experimental findings, we propose a psychophysiological model to aid the design of a biocybernetic adaptation layer that creates real-time modulations in simulation difficulty based on targeted physiological responses.

-

Implementation of Mobile-Based Real-Time Heart Rate Variability Detection for Personalized Healthcare

2019. Luis Quintero (et al.). 2019 International Conference on Data Mining Workshops (ICDMW)

Conference

The ubiquity of wearable devices together with areas like internet of things, big data and machine learning have promoted the development of solutions for personalized healthcare that use digital sensors. However, there is a lack of an implemented framework that is technically feasible, easily scalable and that provides meaningful variables to be used in applications for translational medicine. This paper describes the implementation and early evaluation of a physiological sensing tool that collects and processes photoplethysmography data from a wearable smartwatch to calculate heart rate variability in real-time. A technical open-source framework is outlined, involving mobile devices for collection of heart rate data, feature extraction and execution of data mining or machine learning algorithms that ultimately deliver mobile health interventions tailored to the users. Eleven volunteers participated in the empirical evaluation that was carried out using an existing mobile virtual reality application for mental health and under controlled slow-paced breathing exercises. The results validated the feasibility of implementation of the proposed framework in the stages of signal acquisition and real-time calculation of heart rate variability (HRV). The analysis of data regarding packet loss, peak detection and overall system performance provided considerations to enhance the real-time calculation of HRV features. Further studies are planned to validate all the stages of the proposed framework.

-

Open-Source Physiological Computing Framework using Heart Rate Variability in Mobile Virtual Reality Applications

2019. Luis Quintero, Panagiotis Papapetrou, John E. Muñoz. 2019 IEEE International Conference on Artificial Intelligence and Virtual Reality (AIVR)

Conference

Electronic and mobile health technologies are posed as a tool that can promote self-care and extend coverage to bridge the gap in accessibility to mental care services between low- and high-income communities. However, the current technology-based mental health interventions use systems that are either cumbersome, expensive or require specialized knowledge to be operated. This paper describes the open-source framework PARE-VR, which provides heart rate variability (HRV) analysis to mobile virtual reality (VR) applications. It further outlines the advantages of the presented architecture as an initial step to provide more scalable mental health therapies in comparison to current technical setups; and as an approach with the capability to merge physiological data and artificial intelligence agents to provide computing systems with user understanding and adaptive functionalities. Furthermore, PARE-VR is evaluated with a feasibility study using a specific relaxation exercise with slow-paced breathing. The aim of the study is to get insights into the system performance, its capability to detect HRV metrics in real-time, as well as to identify changes between normal and slow-paced breathing using the HRV data. Preliminary results of the study, with the participation of eleven volunteers, showed high engagement of users towards the VR activity, and demonstrated technical potentialities of the framework to create physiological computing systems using mobile VR and wearable smartwatches for scalable health interventions. Several insights and recommendations were concluded from the study for enhancing the HRV analysis in real-time and conducting future similar studies.

Show all publications by Luis Quintero at Stockholm University
