Sindri MagnússonUniversitetslektor, docent

Om mig

Jag erhöll en kandidatexamen (B.Sc.) i matematik från University of Iceland, Reykjavík, Island, 2011, en masterexamen (M.Sc.) i tillämpad matematik (optimering och systemteori) från Kungliga Tekniska högskolan (KTH), Stockholm, Sverige, 2013, samt en doktorsexamen (Ph.D.) i elektroteknik från samma institution 2017. Jag var postdoktoral forskare vid Harvard University 2018–2019 och gästforskare som doktorand vid Harvard under nio månader 2015–2016.

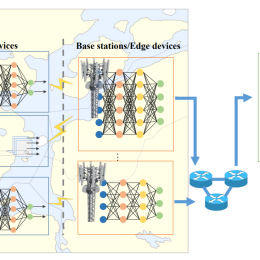

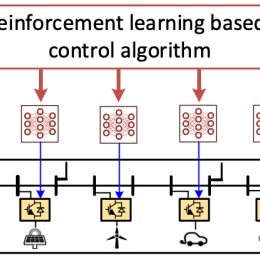

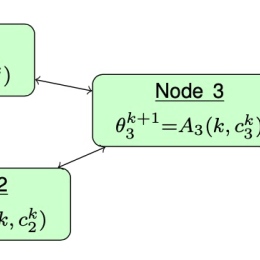

Min forskning ligger brett inom artificiell intelligens och maskininlärning, med särskilt fokus på datadrivna beslut och operationer i komplexa system såsom cyber-fysiska och socio-tekniska nätverk. Detta omfattar utveckling av metoder och teori för inlärning, styrning och optimering som möjliggör tillförlitlig och effektiv drift av storskaliga sammankopplade system. Inom detta bredare sammanhang studerar jag hur flera agenter kan lära sig och anpassa sig i samarbetsmiljöer, och utvecklar algoritmer för multi-agent-inlärning, distribuerat beslutsfattande och förstärkningsinlärning. Med denna grund har min forskning på senare tid även utvidgats till rättvisa och bias i AI-beslutsfattande, där jag undersöker hur algoritmiska val oavsiktligt kan missgynna vissa grupper och hur principbaserade metoder kan utformas för att motverka dessa effekter. Genom att kombinera inlärningsteori med systemtillämpningar strävar jag efter att utveckla AI som är skalbar, effektiv och socialt ansvarstagande.

Jag har omfattande erfarenhet som projektledare, bland annat som huvudansvarig forskare (Principal Investigator) för större forskningsprojekt såsom Vetenskapsrådets (VR) Starting Grant Resource Constrained Machine Learning in Complex Networks (4 MSEK), VR-projektet Federated Reinforcement Learning: Algorithms and Theoretical Foundations (4 MSEK), samt Vinnova-projektet Smart Converters for Climate-neutral Society: Artificial Intelligence-based Control and Coordination (7 MSEK). Som handledare erhöll jag Best Student Paper Award vid IEEE ICASSP 2019. För närvarande är jag Associate Editor för IEEE/ACM Transactions on Networking och har varit medlem i programkommittén för flera ledande konferenser inom reglerteknik och maskininlärning, inklusive IEEE INFOCOM.

Jag har författat över 70 refereegranskade publikationer, inklusive arbete publicerat i ledande AI- och maskininlärningsforum såsom NeurIPS, ICML, AAAI och Transactions on Machine Learning Research, liksom i välrenommerade tidskrifter och konferenser inom signaler, system och kommunikation, samt inom AI-drivna operationer i distribuerade system och cyber-fysiska system, inklusive energisystem och Internet of Things (IoT).

Handledning är en central del av mitt akademiska arbete, både som ett ansvar och som en inspirationskälla. Jag har handlett fler än 30 masteruppsatser inom datavetenskap, artificiell intelligens och närliggande områden. På doktorandnivå har jag haft förmånen att handleda flera mycket talangfulla doktorander, som huvudhandledare för Ali Beikmohammadi, Shubham Vaishnav, Mohsen Amiri, Guilherme Dinis Junior och Alireza Heshmati, samt som bihandledare för Lida Huang (examineras 2025), Zahra Kharazian, Sayeh Sobhani och Alfreds Lapkovskis. Deras projekt omfattar områden som multi-agent-inlärning, federerad inlärning, prediktivt underhåll, förstärkningsinlärning samt rättvisa och bias i AI-beslutsfattande, med tillämpningar i socio-teknologiska och cyber-fysiska system. Tillsammans bidrar dessa arbeten till att utveckla både de teoretiska grunderna för AI och dess praktiska tillämpningar i komplexa, verkliga domäner.

Undervisning

Jag undervisar främst inom datavetenskap och artificiell intelligens på avancerad nivå, men bidrar även till undervisning på grundnivå. Jag är kursansvarig för Abstrakta maskiner och formella språk (7,5 hp) på grundnivå samt för Reinforcement Learning (7,5 hp) på avancerad nivå. Därutöver undervisar jag i flera andra kurser på avancerad nivå, bland annat Research Topics in Data Science (7,5 hp), Machine Learning (7,5 hp) och Current Research and Trends in Health Informatics (7,5 hp), den sistnämnda inom det gemensamma masterprogrammet i hälsoinformatik mellan Stockholms universitet och Karolinska Institutet.

Forskningsprojekt

Publikationer

I urval från Stockholms universitets publikationsdatabas

-

Communication-Adaptive Gradient Sparsification for Federated Learning with Error Compensation

2025. Shubham Vaishnav, Sarit Khirirat, Sindri Magnússon. IEEE Internet of Things Journal 12 (2), 1137-1152

ArtikelLäs mer om Communication-Adaptive Gradient Sparsification for Federated Learning with Error CompensationFederated learning has emerged as a popular distributed machine-learning paradigm. It involves many rounds of iterative communication between nodes to exchange model parameters. With the increasing complexity of ML tasks, the models can be large, having millions of parameters. Moreover, edge and IoT nodes often have limited energy resources and channel bandwidths. Thus, reducing the communication cost in Federated Learning is a bottleneck problem. This cost could be in terms of energy consumed, delay involved, or amount of data communicated. We propose a communication cost-adaptive model sparsification for Federated Learning with Error Compensation. The central idea is to adapt the sparsification level in run-time by optimizing the ratio between the impact of the communicated model parameters and communication cost. We carry out a detailed convergence analysis to establish the theoretical foundations of the proposed algorithm. We conduct extensive experiments to train both convex and non-convex machine learning models on a standard dataset. We illustrate the efficiency of the proposed algorithm by comparing its performance with three baseline schemes. The performance of the proposed algorithm is validated for two communication models and three cost functions. Simulation results show that the proposed algorithm needs a substantially less amount of communication than the three baseline schemes while achieving the best accuracy and fastest convergence. The results are consistent for all the considered cost models, cost functions, and ML models. Thus, the proposed FL-CATE algorithm can substantially improve the communication efficiency of federated learning, irrespective of the ML tasks, costs, and communication models.

-

Crowding distance and IGD-driven grey wolf reinforcement learning approach for multi-objective agile earth observation satellite scheduling

2025. He Wang (et al.). International Journal of Digital Earth 18 (1)

ArtikelLäs mer om Crowding distance and IGD-driven grey wolf reinforcement learning approach for multi-objective agile earth observation satellite schedulingWith the rise of low-cost launches, miniaturized space technology, and commercialization, the cost of space missions has dropped, leading to a surge in flexible Earth observation satellites. This increased demand for complex and diverse imaging products requires addressing multi-objective optimization in practice. To this end, we propose a multi-objective agile Earth observation satellite scheduling problem (MOAEOSSP) model and introduce a reinforcement learning-based multi-objective grey wolf optimization (RLMOGWO) algorithm. It aims to maximize observation efficiency while minimizing energy consumption. During population initialization, the algorithm uses chaos mapping and opposition-based learning to enhance diversity and global search, reducing the risk of local optima. It integrates Q-learning into an improved multi-objective grey wolf optimization framework, designing state-action combinations that balance exploration and exploitation. Dynamic parameter adjustments guide position updates, boosting adaptability across different optimization stages. Moreover, the algorithm introduces a reward mechanism based on the crowding distance and inverted generational distance (IGD) to maintain Pareto front diversity and distribution, ensuring a strong multi-objective optimization performance. The experimental results show that the algorithm excels at solving the MOAEOSSP, outperforming competing algorithms across several metrics and demonstrating its effectiveness for complex optimization problems.

-

On the Convergence of Federated Learning Algorithms Without Data Similarity

2025. Ali Beikmohammadi, Sarit Khirirat, Sindri Magnússon. IEEE Transactions on Big Data 11 (2), 659-668

ArtikelLäs mer om On the Convergence of Federated Learning Algorithms Without Data SimilarityData similarity assumptions have traditionally been relied upon to understand the convergence behaviors of federated learning methods. Unfortunately, this approach often demands fine-tuning step sizes based on the level of data similarity. When data similarity is low, these small step sizes result in an unacceptably slow convergence speed for federated methods. In this paper, we present a novel and unified framework for analyzing the convergence of federated learning algorithms without the need for data similarity conditions. Our analysis centers on an inequality that captures the influence of step sizes on algorithmic convergence performance. By applying our theorems to well-known federated algorithms, we derive precise expressions for three widely used step size schedules: fixed, diminishing, and step-decay step sizes, which are independent of data similarity conditions. Finally, we conduct comprehensive evaluations of the performance of these federated learning algorithms, employing the proposed step size strategies to train deep neural network models on benchmark datasets under varying data similarity conditions. Our findings demonstrate significant improvements in convergence speed and overall performance, marking a substantial advancement in federated learning research.

-

Parallel Momentum Methods Under Biased Gradient Estimations

2025. Ali Beikmohammadi, Sarit Khirirat, Sindri Magnússon. IEEE Transactions on Control of Network Systems

ArtikelLäs mer om Parallel Momentum Methods Under Biased Gradient EstimationsParallel stochastic gradient methods are gaining prominence in solving large-scale machine learning problems that involve data distributed across multiple nodes. However, obtaining unbiased stochastic gradients, which have been the focus of most theoretical research, is challenging in many distributed machine learning applications. The gradient estimations easily become biased, for example, when gradients are compressed or clipped, when data is shuffled, and in meta-learning and reinforcement learning. In this work, we establish worst-case bounds on parallel momentum methods under biased gradient estimation on both general non-convex and μ-PL non-convex problems. Our analysis covers general distributed optimization problems, and we work out the implications for special cases where gradient estimates are biased, i.e. in meta-learning and when the gradients are compressed or clipped. Our numerical experiments verify our theoretical findings and show faster convergence performance of momentum methods than traditional biased gradient descent.

-

SCANIA Component X dataset: a real-world multivariate time series dataset for predictive maintenance

2025. Zahra Kharazian (et al.). Scientific Data 12

ArtikelLäs mer om SCANIA Component X datasetPredicting failures and maintenance time in predictive maintenance is challenging due to the scarcity of comprehensive real-world datasets, and among those available, few are of time series format. This paper introduces a real-world, multivariate time series dataset collected exclusively from a single anonymized engine component (Component X) across a fleet of SCANIA trucks. The dataset includes operational data, repair records, and specifications related to Component X while maintaining confidentiality through anonymization. It is well-suited for a range of machine learning applications, including classification, regression, survival analysis, and anomaly detection, particularly in predictive maintenance scenarios. The dataset’s large population size, diverse features (in the form of histograms and numerical counters), and temporal information make it a unique resource in the field. The objective of releasing this dataset is to give a broad range of researchers the possibility of working with real-world data from an internationally well-known company and introduce a standard benchmark to the predictive maintenance field, fostering reproducible research.

-

A Strategy Fusion-Based Multiobjective Optimization Approach for Agile Earth Observation Satellite Scheduling Problem

2024. He Wang (et al.). IEEE Transactions on Geoscience and Remote Sensing 62, 1-14

ArtikelLäs mer om A Strategy Fusion-Based Multiobjective Optimization Approach for Agile Earth Observation Satellite Scheduling ProblemAgile satellite imaging scheduling plays a vital role in improving emergency response, urban planning, national defense, and resource management. With the rise in the number of in-orbit satellites and observation windows, the need for diverse agile Earth observation satellite (AEOS) scheduling has surged. However, current research seldom addresses multiple optimization objectives, which are crucial in many engineering practices. This article tackles a multiobjective AEOS scheduling problem (MOAEOSSP) that aims to optimize total observation task profit, satellite energy consumption, and load balancing. To address this intricate problem, we propose a strategy-fused multiobjective dung beetle optimization (SFMODBO) algorithm. This novel algorithm harnesses the position update characteristics of various dung beetle populations and integrates multiple high-adaptability strategies. Consequently, it strikes a better balance between global search capability and local exploitation accuracy, making it more effective at exploring the solution space and avoiding local optima. The SFMODBO algorithm enhances global search capabilities through diverse strategies, ensuring thorough coverage of the search space. Simultaneously, it significantly improves local optimization precision by fine-tuning solutions in promising regions. This dual approach enables more robust and efficient problem-solving. Simulation experiments confirm the effectiveness and efficiency of the SFMODBO algorithm. Results indicate that it significantly outperforms competitors across multiple metrics, achieving superior scheduling schemes. In addition to these enhanced metrics, the proposed algorithm also exhibits advantages in computation time and resource utilization. This not only demonstrates the algorithm’s robustness but also underscores its efficiency and speed in solving the MOAEOSSP.

-

Accelerating actor-critic-based algorithms via pseudo-labels derived from prior knowledge

2024. Ali Beikmohammadi, Sindri Magnússon. Information Sciences 661

ArtikelLäs mer om Accelerating actor-critic-based algorithms via pseudo-labels derived from prior knowledgeDespite the huge success of reinforcement learning (RL) in solving many difficult problems, its Achilles heel has always been sample inefficiency. On the other hand, in RL, taking advantage of prior knowledge, intentionally or unintentionally, has usually been avoided, so that, training an agent from scratch is common. This not only causes sample inefficiency but also endangers safety –especially during exploration. In this paper, we help the agent learn from the environment by using the pre-existing (but not necessarily exact or complete) solution for a task. Our proposed method can be integrated with any RL algorithm developed based on policy gradient and actor-critic methods. The results on five tasks with different difficulty levels by using two well-known actor-critic-based methods as the backbone of our proposed method (SAC and TD3) show our success in greatly improving sample efficiency and final performance. We have gained these results alongside robustness to noisy environments at the cost of just a slight computational overhead, which is negligible.

-

CoPAL: Conformal Prediction for Active Learning with Application to Remaining Useful Life Estimation in Predictive Maintenance

2024. Zahra Kharazian (et al.). Proceedings of Machine Learning Research, 195-217

KonferensLäs mer om CoPALActive learning has received considerable attention as an approach to obtain high predictive performance while minimizing the labeling effort. A central component of the active learning framework concerns the selection of objects for labeling, which are used for iteratively updating the underlying model. In this work, an algorithm called CoPAL (Conformal Prediction for Active Learning) is proposed, which makes the selection of objects within active learning based on the uncertainty as quantified by conformal prediction. The efficacy of CoPAL is investigated by considering the task of estimating the remaining useful life (RUL) of assets in the domain of predictive maintenance (PdM). Experimental results

are presented, encompassing diverse setups, including different models, sample selection criteria, conformal predictors, and datasets, using root mean squared error (RMSE) as the primary evaluation metric while also reporting prediction interval sizes over the iterations. The comprehensive analysis confirms the positive effect of using CoPAL for improving predictive performance

-

Compressed Federated Reinforcement Learning with a Generative Model

2024. Ali Beikmohammadi, Sarit Khirirat, Sindri Magnússon. Machine Learning and Knowledge Discovery in Databases. Research Track, 20-37

KonferensLäs mer om Compressed Federated Reinforcement Learning with a Generative ModelReinforcement learning has recently gained unprecedented popularity, yet it still grapples with sample inefficiency. Addressing this challenge, federated reinforcement learning (FedRL) has emerged, wherein agents collaboratively learn a single policy by aggregating local estimations. However, this aggregation step incurs significant communication costs. In this paper, we propose CompFedRL, a communication-efficient FedRL approach incorporating both \textit{periodic aggregation} and (direct/error-feedback) compression mechanisms. Specifically, we consider compressed federated Q-learning with a generative model setup, where a central server learns an optimal Q-function by periodically aggregating compressed Q-estimates from local agents. For the first time, we characterize the impact of these two mechanisms (which have remained elusive) by providing a finite-time analysis of our algorithm, demonstrating strong convergence behaviors when utilizing either direct or error-feedback compression. Our bounds indicate improved solution accuracy concerning the number of agents and other federated hyperparameters while simultaneously reducing communication costs. To corroborate our theory, we also conduct in-depth numerical experiments to verify our findings, considering Top-K and Sparsified-K sparsification operators.

-

Data Driven Decentralized Control of Inverter based Renewable Energy Sources using Safe Guaranteed Multi-Agent Deep Reinforcement Learning

2024. Mengfan Zhang (et al.). IEEE Transactions on Sustainable Energy 15 (2), 1288-1299

ArtikelLäs mer om Data Driven Decentralized Control of Inverter based Renewable Energy Sources using Safe Guaranteed Multi-Agent Deep Reinforcement LearningThe wide integration of inverter based renewable energy sources (RESs) in modern grids may cause severe voltage violation issues due to high stochastic fluctuations of RESs. Existing centralized approaches can achieve optimal results for voltage regulation, but they have high communication burdens; existing decentralized methods only require local information, but they cannot achieve optimal results. Deep reinforcement learning (DRL) based methods are effective to deal with uncertainties, but it is difficult to guarantee secure constraints in existing DRL training. To address the above challenges, this paper proposes a projection embedded multi-agent DRL algorithm to achieve decentralized optimal control of distribution grids with guaranteed 100% safety. The safety of the DRL training is guaranteed via an embedded safe policy projection, which could smoothly and effectively restrict the DRL agent action space, and avoid any violation of physical constraints in distribution grid operations. The multi-agent implementation of the proposed

algorithm enables the optimal solution achieved in a decentralized manner that does not require real-time communication for practical deployment. The proposed method is tested in modified IEEE 33-bus distribution and compared with existing methods; the results validate the effectiveness of the proposed method in achieving decentralized optimal control with guaranteed 100% safety and without the requirement of real-time communications

-

Multiobjective and Constrained Reinforcement Learning for IoT

2024. Shubham Vaishnav, Sindri Magnússon. Learning techniques for Internet of Things, 153-170

KapitelLäs mer om Multiobjective and Constrained Reinforcement Learning for IoTIoT networks of the future will be characterized by autonomous decision-making by individual devices. Decision-making is done with the purpose of optimizing certain objectives. A multitude of mathematically oriented algorithms exist for solving optimization problems. However, optimization in IoT networks is challenging due to a number of uncertainties, complex network topologies, and rapid changes in the environment. This makes the data-driven and machine learning (ML) approaches more suitable for effectively handling IoT environments’ dynamic and intricate nature. However, supervised and unsupervised ML approaches depend on training data, which is not always available before training. In recent years, reinforcement learning (RL) has attracted considerable attention for solving optimization problems in IoT. This is because RL has the distinguishing feature of learning with experience while interacting with the environment without training data. A central challenge in decision-making in IoT networks is that most optimization problems consist of co-optimizing multiple conflicting objectives. With the development of multi-objective RL (MORL) approaches over the last two decades, there is great potential for utilizing them for future IoT networks. Most recently developed MORL approaches have not been applied in the IoT domain. In this chapter, we will discuss the need for efficient multi-objective optimization in IoT, the fundamentals of using RL for decision-making in IoT, an overview of existing MORL approaches, and, finally, the future scope and challenges associated with utilizing MORL for IoT.

-

On the Convergence of TD-Learning on Markov Reward Processes with Hidden States

2024. Mohsen Amiri, Sindri Magnússon. European Control Conference (ECC), 2097-2104

KonferensLäs mer om On the Convergence of TD-Learning on Markov Reward Processes with Hidden StatesWe investigate the convergence properties of Temporal Difference (TD) Learning on Markov Reward Processes (MRPs) with new structures for incorporating hidden state information. In particular, each state is characterized by both observable and hidden components, with the assumption that the observable and hidden parts are statistically independent. This setup differs from Hidden Markov Models and Partially Observable Markov Decision Models, in that here it is not possible to infer the hidden information from the state observations. Nevertheless, the hidden state influences the MRP through the rewards, rendering the reward sequence non-Markovian. We prove that TD learning, when applied only on the observable part of the states, converges to a fixed point under mild assumptions on the step-size. Furthermore, we characterize this fixed point in terms of the statistical properties of both the Markov chains representing the observable and hidden parts of the states. Beyond the theoretical results, we illustrate the novel structure on two application setups in communications. Furthermore, we validate our results through experimental evidence, showcasing the convergence of the algorithm in practice.

-

Policy Control with Delayed, Aggregate, and Anonymous Feedback

2024. Guilherme Dinis Chaliane Junior, Sindri Magnússon, Jaakko Hollmén. Machine Learning and Knowledge Discovery in Databases. Research Track: European Conference, ECML PKDD 2024, Vilnius, Lithuania, September 9–13, 2024, Proceedings, Part VI, 389-406

KonferensLäs mer om Policy Control with Delayed, Aggregate, and Anonymous FeedbackReinforcement learning algorithms have a dependency on observing rewards for actions taken. The relaxed setting of having fully observable rewards, however, can be infeasible in certain scenarios, due to either cost or the nature of the problem. Of specific interest here is the challenge of learning a policy when rewards are delayed, aggregated, and anonymous (DAAF). A problem which has been addressed in bandits literature and, to the best of our knowledge, to a lesser extent in the more general reinforcement learning (RL) setting. We introduce a novel formulation that mirrors scenarios encountered in real-world applications, characterized by intermittent and aggregated reward observations. To address these constraints, we develop four new algorithms: one employs least squares for true reward estimation; two and three adapt Q-learning and SARSA, to deal with our unique setting; and the fourth leverages a policy with options framework. Through a thorough and methodical experimental analysis, we compare these methodologies, demonstrating that three of them can approximate policies nearly as effectively as those derived from complete information scenarios, albeit with minimal performance degradation due to informational constraints. Our findings pave the way for more robust RL applications in environments with limited reward feedback.

-

A General Framework to Distribute Iterative Algorithms With Localized Information Over Networks

2023. Thomas Ohlson Timoudas (et al.). IEEE Transactions on Automatic Control 68 (12), 7358-7373

ArtikelLäs mer om A General Framework to Distribute Iterative Algorithms With Localized Information Over NetworksEmerging applications in the Internet of Things (IoT) and edge computing/learning have sparked massive renewed interest in developing distributed versions of existing (centralized) iterative algorithms often used for optimization or machine learning purposes. While existing work in the literature exhibits similarities, for the tasks of both algorithm design and theoretical analysis, there is still no unified method or framework for accomplishing these tasks. This article develops such a general framework for distributing the execution of (centralized) iterative algorithms over networks in which the required information or data is partitioned between the nodes in the network. This article furthermore shows that the distributed iterative algorithm, which results from the proposed framework, retains the convergence properties (rate) of the original (centralized) iterative algorithm. In addition, this article applies the proposed general framework to several interesting example applications, obtaining results comparable to the state of the art for each such example, while greatly simplifying and generalizing their convergence analysis. These example applications reveal new results for distributed proximal versions of gradient descent, the heavy ball method, and Newton's method. For example, these results show that the dependence on the condition number for the convergence rate of this distributed heavy ball method is at least as good as that of centralized gradient descent.

-

AID4HAI: Automatic Idea Detection for Healthcare-Associated Infections from Twitter, A Framework based on Active Learning and Transfer Learning

2023. Zahra Kharazian (et al.). 35th Annual Workshop of the Swedish Artificial Intelligence Society SAIS 2023

KonferensLäs mer om AID4HAIThis study is a collaboration between data scientists, innovation management researchers from academia, and experts from a hygiene and health company. The study aims to develop an automatic idea detection package to control and prevent healthcare-associated infections (HAI) by extracting informative ideas from social media using Active Learning and Transfer Learning. The proposed package includes a dataset collected from Twitter, expert-created labels, and an annotation framework. Transfer Learning has been used to build a twostep deep neural network model that gradually extracts the semantic representation of the text data using the BERTweet language model in the first step. In the second step, the model classifies the extracted representations as informative or non-informative using a multi-layer perception (MLP). The package is named AID4HAI (Automatic Idea Detection for controlling and preventing Healthcare-Associated Infections) and is publicly available on GitHub.

-

AID4HAI: Automatic Idea Detection for Healthcare-Associated Infections from Twitter, A Framework based on Active Learning and Transfer Learning

2023. Zahra Kharazian (et al.). Advances in Intelligent Data Analysis XXI, 195-207

KonferensLäs mer om AID4HAIThis research is an interdisciplinary work between data scientists, innovation management researchers and experts from Swedish academia and a hygiene and health company. Based on this collaboration, we have developed a novel package for automatic idea detection with the motivation of controlling and preventing healthcare-associated infections (HAI). The principal idea of this study is to use machine learning methods to extract informative ideas from social media to assist healthcare professionals in reducing the rate of HAI. Therefore, the proposed package offers a corpus of data collected from Twitter, associated expert-created labels, and software implementation of an annotation framework based on the Active Learning paradigm. We employed Transfer Learning and built a two-step deep neural network model that incrementally extracts the semantic representation of the collected text data using the BERTweet language model in the first step and classifies these representations as informative or non-informative using a multi-layer perception (MLP) in the second step. The package is called AID4HAI (Automatic Idea Detection for controlling and preventing Healthcare-Associated Infections) and is made fully available (software code and the collected data) through a public GitHub repository. We believe that sharing our ideas and releasing these ready-to-use tools contributes to the development of the field and inspires future research.

-

Adaptive Hyperparameter Selection for Differentially Private Gradient Descent

2023. Dominik Fay (et al.). Transactions on Machine Learning Research (9)

ArtikelLäs mer om Adaptive Hyperparameter Selection for Differentially Private Gradient DescentWe present an adaptive mechanism for hyperparameter selection in differentially private optimization that addresses the inherent trade-off between utility and privacy. The mechanism eliminates the often unstructured and time-consuming manual effort of selecting hyperpa- rameters and avoids the additional privacy costs that hyperparameter selection otherwise incurs on top of that of the actual algorithm.

We instantiate our mechanism for noisy gradient descent on non-convex, convex and strongly convex loss functions, respectively, to derive schedules for the noise variance and step size. These schedules account for the properties of the loss function and adapt to convergence metrics such as the gradient norm. When using these schedules, we show that noisy gradient descent converges at essentially the same rate as its noise-free counterpart. Numerical experiments show that the schedules consistently perform well across a range of datasets without manual tuning.

-

Comparing NARS and Reinforcement Learning: An Analysis of ONA and Q-Learning Algorithms

2023. Ali Beikmohammadi, Sindri Magnússon. Artificial General Intelligence, 21-31

KonferensLäs mer om Comparing NARS and Reinforcement LearningIn recent years, reinforcement learning (RL) has emerged as a popular approach for solving sequence-based tasks in machine learning. However, finding suitable alternatives to RL remains an exciting and innovative research area. One such alternative that has garnered attention is the Non-Axiomatic Reasoning System (NARS), which is a general-purpose cognitive reasoning framework. In this paper, we delve into the potential of NARS as a substitute for RL in solving sequence-based tasks. To investigate this, we conduct a comparative analysis of the performance of ONA as an implementation of NARS and Q-Learning in various environments that were created using the Open AI gym. The environments have different difficulty levels, ranging from simple to complex. Our results demonstrate that NARS is a promising alternative to RL, with competitive performance in diverse environments, particularly in non-deterministic ones.

-

Delay-Agnostic Asynchronous Distributed Optimization

2023. Xuyang Wu (et al.). CDC 2023 Singapore, 1082-1087

KonferensLäs mer om Delay-Agnostic Asynchronous Distributed OptimizationExisting asynchronous distributed optimization algorithms often use diminishing step-sizes that cause slow practical convergence, or fixed step-sizes that depend on an assumed upper bound of delays. Not only is such a delay bound hard to obtain in advance, but it is also large and therefore results in unnecessarily slow convergence. This paper develops asynchronous versions of two distributed algorithms, DGD and DGD-ATC, for solving consensus optimization problems over undirected networks. In contrast to alternatives, our algorithms can converge to the fixed point set of their synchronous counterparts using step-sizes that are independent of the delays. We establish convergence guarantees under both partial and total asynchrony. The practical performance of our algorithms is demonstrated by numerical experiments.

-

Delay-agnostic Asynchronous Coordinate Update Algorithm

2023. Xuyang Wu (et al.). ICML'23, 37582-37606

KonferensLäs mer om Delay-agnostic Asynchronous Coordinate Update AlgorithmWe propose a delay-agnostic asynchronous coordinate update algorithm (DEGAS) for computing operator fixed points, with applications to asynchronous optimization. DEGAS includes novel asynchronous variants of ADMM and block-coordinate descent as special cases. We prove that DEGAS converges with both bounded and unbounded delays under delay-free parameter conditions. We also validate by theory and experiments that DEGAS adapts well to the actual delays. The effectiveness of DEGAS is demonstrated by numerical experiments on classification problems.

-

Distributed safe resource allocation using barrier functions

2023. Xuyang Wu, Sindri Magnússon, Mikael Johansson. Automatica 153

ArtikelLäs mer om Distributed safe resource allocation using barrier functionsResource allocation plays a central role in networked systems such as smart grids, communication networks, and urban transportation systems. In these systems, many constraints have physical meaning and infeasible allocations can result in a system breakdown. Hence, algorithms with asymptotic feasibility guarantees can be insufficient since they cannot ensure that an implementable solution is found in finite time. This paper proposes a distributed feasible method (DFM) for resource allocation based on barrier functions. In DFM, every iterate is feasible and safe to implement since it does not violate the physical constraints. We prove that, under mild conditions, DFM converges to an arbitrarily small neighborhood of the optimum. Numerical experiments demonstrate the competitive performance

of DFM.

-

Energy-Efficient and Adaptive Gradient Sparsification for Federated Learning

2023. Shubham Vaishnav, Maria Efthymiou, Sindri Magnússon. IEEE International Conference on Communications (ICC), 2023, 1256-1261

KonferensLäs mer om Energy-Efficient and Adaptive Gradient Sparsification for Federated LearningFederated learning is an emerging machine-learning technique that trains an algorithm across multiple decentralized edge devices or clients holding local data samples. It involves training local models on local data and uploading model parameters to a server node at regular intervals to generate a global model which is transmitted to all clients. However, edge nodes often have limited energy resources, and hence performing energy-efficient communication of model parameters is a bottleneck problem. We propose an energy-adaptive model sparsification for Federated Learning. The central idea is to adapt the sparsification level in run-time by optimizing the ratio between information content and energy cost. We illustrate the efficiency of the proposed algorithm by comparing its performance with three baseline schemes. We validate the performance of the proposed algorithm for two cost models. Simulation results show that the proposed algorithm needs exponentially less amount of communication and energy as compared to the three baseline schemes while achieving the best accuracy and fastest convergence.

-

Eyes can draw: A high-fidelity free-eye drawing method with unimodal gaze control

2023. Lida Huang (et al.). International journal of human-computer studies 170

ArtikelLäs mer om Eyes can drawEyeCompass is a novel free-eye drawing system enabling high-fidelity and efficient free-eye drawing through unimodal gaze control, addressing the bottlenecks of gaze-control drawing. EyeCompass helps people to draw using only their eyes, which is of value to people with motor disabilities. Currently, there is no effective gaze-control drawing application due to multiple challenges including involuntary eye movements, conflicts between visuomotor transformation and ocular observation, gaze trajectory control, and inherent eye-tracking errors. EyeCompass addresses this using two initial gaze-control drawing mechanisms: brush damping dynamics and the gaze-oriented method. The user experiments compare the existing gaze-control drawing method and EyeCompass, showing significant improvements in the drawing performance of the mechanisms concerned. The field study conducted with motor-disabled people produced various creative graphics and indicates good usability of the system. Our studies indicate that EyeCompass is a high-fidelity, accurate, feasible free-eye drawing method for creating artistic works via unimodal gaze control.

-

Improved Step-Size Schedules for Proximal Noisy Gradient Methods

2023. Sarit Khirirat (et al.). IEEE Transactions on Signal Processing 71, 189-201

ArtikelLäs mer om Improved Step-Size Schedules for Proximal Noisy Gradient MethodsNoisy gradient algorithms have emerged as one of the most popular algorithms for distributed optimization with massive data. Choosing proper step-size schedules is an important task to tune in the algorithms for good performance. For the algorithms to attain fast convergence and high accuracy, it is intuitive to use large step-sizes in the initial iterations when the gradient noise is typically small compared to the algorithm-steps, and reduce the step-sizes as the algorithm progresses. This intuition has been confirmed in theory and practice for stochastic gradient descent. However, similar results are lacking for other methods using approximate gradients. This paper shows that the diminishing step-size strategies can indeed be applied for a broad class of noisy gradient algorithms. Our analysis framework is based on two classes of systems that characterize the impact of the step-sizes on the convergence performance of many algorithms. Our results show that such step-size schedules enable these algorithms to enjoy the optimal rate. We exemplify our results on stochastic compression algorithms. Our experiments validate fast convergence of these algorithms with the step decay schedules.

-

Improving and Analyzing Sketchy High-Fidelity Free-Eye Drawing

2023. Lida Huang (et al.). Proceedings of the 2023 ACM Designing Interactive Systems Conference, 856-870

KonferensLäs mer om Improving and Analyzing Sketchy High-Fidelity Free-Eye DrawingSome people with a motor disability that limits hand movements use technology to draw via their eyes. Free-eye drawing has been re-investigated recently and yielded state-of-the-art results via unimodal gaze control. However, limitations remain, including limited functions, conflicts between observation and drawing, and the brush tailing issue. We introduce a professional unimodal gaze control free-eye drawing application and improve upon free-eye drawing by extended gaze-based user interface functions, improved brush dynamics, and a double-blink gaze gesture. An experiment and a field study were conducted to assess the system’s usability compared to the mainstream gaze-control drawing method and hand drawing and the accessibility among users with motor disabilities. The results showed that the application provides efficient interaction and the ability to create hand-sketch-level graphics for people with motor disabilities. Herein, we contribute a robust and professional free-eye drawing application, detailing valuable design considerations for future developments in gaze interaction.

-

Intelligent Processing of Data Streams on the Edge Using Reinforcement Learning

2023. Shubham Vaishnav, Sindri Magnússon. 2023 IEEE International Conference on Communications Workshops (ICC Workshops)

KonferensLäs mer om Intelligent Processing of Data Streams on the Edge Using Reinforcement LearningA key challenge in many IoT applications is to en-sure energy efficiency while processing large amounts of streaming data at the edge. Nodes often need to process time-sensitive data using limited computing and communication resources. To that end, we design a novel R - Learning based Offloading framework, RLO, that allows edge nodes to learn energy optimal decisions from experience regarding processing incoming data streams. In particular, when should the node process data locally? When should it transmit data to be processed by a fog node? And when should it store data for later processing? We validate our results on both real and simulated data streams. Simulation results show that RLO learns with time to achieve better overall-rewards with respect to three existing baseline schemes. Moreover, the proposed algorithm excels the existing baseline schemes when different priorities were set on the two objectives. We also illustrate how to adjust the priorities of the two objectives based on the application requirements and network constraints.

-

Revisiting the Curvature-aided IAG: Improved Theory and Reduced Complexity

2023. Erik Berglund (et al.). IFAC-PapersOnLine 56 (2), 5221-5226

ArtikelLäs mer om Revisiting the Curvature-aided IAGThe curvature-aided IAG (CIAG) algorithm is an efficient asynchronous optimization method that accelerates IAG using a delay compensation technique. However, existing step-size rules for CIAG are conservative and hard to implement, and the Hessian computation in CIAG is often computationally expensive. To alleviate these issues, we first provide an easy-to-implement and less conservative step-size rule for CIAG. Next, we propose a modified CIAG algorithm that reduces the computational complexity by approximating the Hessian with a constant matrix. Convergence results are derived for each algorithm on both convex and strongly convex problems, and numerical experiments on logistic regression demonstrate their effectiveness in practice.

-

Robust Contrastive Learning and Multi-shot Voting for High-dimensional Multivariate Data-driven Prognostics

2023. Kaiji Sun (et al.). 2023 IEEE International Conference on Prognostics and Health Management (ICPHM), 53-60

KonferensLäs mer om Robust Contrastive Learning and Multi-shot Voting for High-dimensional Multivariate Data-driven PrognosticsThe availability of data gathered from industrial sensors has increased expeditiously in recent years. These data are valuable assets in delivering exceptional services for manufacturing enterprises. We see growing interests and expectations from manufacturers in deploying artificial intelligence for predictive maintenance. The paper has adopted and transferred a state-of-the-art method from few-shot learning to failure prognostics using the Siamese neural network based contractive learning. The method has three main characteristics on top of the highest performance - a sensitivity of 98.4% for Scania truck's air pressure system failure capture, compared to the methods proposed by the previous related research: prediction stability, deployment flexibility, and the robust multi-shot diagnosis based on selected historical reference samples

-

TA-Explore: Teacher-Assisted Exploration for Facilitating Fast Reinforcement Learning: Extended Abstract

2023. Ali Beikmohammadi, Sindri Magnússon. AAMAS '23: Proceedings of the 2023 International Conference on Autonomous Agents and Multiagent Systems, 2412-2414

KonferensLäs mer om TA-ExploreReinforcement Learning (RL) is crucial for data-driven decision-making but suffers from sample inefficiency. This poses a risk to system safety and can be costly in real-world environments with physical interactions. This paper proposes a human-inspired framework to improve the sample efficiency of RL algorithms, which gradually provides the learning agent with simpler but similar tasks that progress toward the main task. The proposed method does not require pre-training and can be applied to any goal, environment, and RL algorithm, including value-based and policy-based methods, as well as tabular and deep-RL methods. The framework is evaluated on a Random Walk and optimal control problem with constraint, showing good performance in improving the sample efficiency of RL-learning algorithms.

-

Delay-adaptive step-sizes for asynchronous learning

2022. Xuyang Wu (et al.). Proceedings of the 39th International Conference on Machine Learning, 24093-24113

KonferensLäs mer om Delay-adaptive step-sizes for asynchronous learningIn scalable machine learning systems, model training is often parallelized over multiple nodes that run without tight synchronization. Most analysis results for the related asynchronous algorithms use an upper bound on the information delays in the system to determine learning rates. Not only are such bounds hard to obtain in advance, but they also result in unnecessarily slow convergence. In this paper, we show that it is possible to use learning rates that depend on the actual time-varying delays in the system. We develop general convergence results for delay-adaptive asynchronous iterations and specialize these to proximal incremental gradient descent and block coordinate descent algorithms. For each of these methods, we demonstrate how delays can be measured on-line, present delay-adaptive step-size policies, and illustrate their theoretical and practical advantages over the state-of-the-art.

-

Eco-Fedsplit: Federated Learning with Error-Compensated Compression

2022. Sarit Khirirat, Sindri Magnússon, Mikael Johansson. 2022 IEEE International Conference on Acoustics, Speech,and Signal Processing, 5952-5956

KonferensLäs mer om Eco-FedsplitFederated learning is an emerging framework for collaborative machine-learning on devices which do not want to share local data. State-of-the art methods in federated learning reduce the communication frequency, but are not guaranteed to converge to the optimal model parameters. These methods also experience a communication bottleneck, especially when the devices are power-constrained and communicate over a shared medium. This paper presents ECO-FedSplit, an algorithm that increases the communication efficiency of federated learning without sacrificing solution accuracy. The key is to compress inter-device communication and to compensate for information losses in a theoretically justified manner. We prove strong convergence properties of ECO-FedSplit on strongly convex optimization problems and show that the algorithm yields a highly accurate solution with dramatically reduced communication. Extensive numerical experiments validate our theoretical result on real data sets.

-

EpidRLearn: Learning Intervention Strategies for Epidemics with Reinforcement Learning

2022. Maria Bampa (et al.). Artificial Intelligence in Medicine, 189-199

KonferensLäs mer om EpidRLearnEpidemics of infectious diseases can pose a serious threat to public health and the global economy. Despite scientific advances, containment and mitigation of infectious diseases remain a challenging task. In this paper, we investigate the potential of reinforcement learning as a decision making tool for epidemic control by constructing a deep Reinforcement Learning simulator, called EpidRLearn, composed of a contact-based, age-structured extension of the SEIR compartmental model, referred to as C-SEIR. We evaluate EpidRLearn by comparing the learned policies to two deterministic policy baselines. We further assess our reward function by integrating an alternative reward into our deep RL model. The experimental evaluation indicates that deep reinforcement learning has the potential of learning useful policies under complex epidemiological models and large state spaces for the mitigation of infectious diseases, with a focus on COVID-19.

-

Federated learning for iout: Concepts, applications, challenges and future directions

2022. Nancy Victor (et al.). IEEE Internet of Things Magazine (IoT) 5 (4)

ArtikelLäs mer om Federated learning for ioutInternet of Underwater Things (IoUT) have gained rapid momentum over the past decade with applications spanning from environmental monitoring and exploration, defence applications, etc. The traditional IoUT systems use machine learning (ML) approaches which cater the needs of reliability, efficiency and timeliness. However, an extensive review of the various studies conducted highlight the significance of data privacy and security in IoUT frameworks as a predominant factor in achieving desired outcomes in mission critical applications. Federated learning (FL) is a secured, decentralized framework which is a recent development in ML, that can help in fulfilling the challenges faced by conventional ML approaches in IoUT. This article presents an overview of the various applications of FL in IoUT, its challenges, open issues and indicates direction of future research prospects.

-

Interactive Painting Volumetric Cloud Scenes with Simple Sketches Based on Deep Learning

2022. Lida Huang (et al.). 15th International Conference on Human System Interaction (HSI) 2022

KonferensLäs mer om Interactive Painting Volumetric Cloud Scenes with Simple Sketches Based on Deep LearningSynthesizing realistic clouds is a complex and demanding task, as clouds are characterized by random shapes, complex scattering and turbulent appearances. Existing approaches either employ two-dimensional image matting or three-dimensional physical simulations. This paper proposes a novel sketch-to-image deep learning system using fast sketches to paint and edit volumetric clouds. We composed a dataset of 2000 real cloud images and translated simple strokes into authentic clouds based on a conditional generative adversarial network (cGAN). Compared to previous cloud simulation methods, our system demonstrates more efficient and straightforward processes to generate authentic clouds for computer graphics, providing a widely accessible sky scene design approach for use by novices, amateurs, and expert artists.

-

Leakage Localization in Water Distribution Networks: A Model-Based Approach

2022. Ludvig Lindström (et al.). 2022 European Control Conference (ECC), 1515-1520

KonferensLäs mer om Leakage Localization in Water Distribution NetworksThe paper studies the problem of leakage localization in water distribution networks. For the case of a single pipe that suffers from a single leak, by taking recourse to pressure and flow measurements, and assuming those are noiseless, we provide a closed-form expression for leak localization, leak exponent and leak constant. For the aforementioned setting, but with noisy pressure and flow measurements, an expression for estimating the location of the leak is provided. Finally, assuming the existence of a single leak, for a network comprising of more than one pipe and assuming that the network has a tree structure, we provide a systematic procedure for determining the leak location, the leak exponent, and the leak constant.

-

Multi-Agent Deep Reinforcement Learning for Decentralized Voltage-Var Control in Distribution Power System

2022. Mengfan Zhang (et al.). 2022 IEEE Energy Conversion Congress and Exposition (ECCE), 1-5

KonferensLäs mer om Multi-Agent Deep Reinforcement Learning for Decentralized Voltage-Var Control in Distribution Power SystemWith the large integration of renewables, the traditional power system becomes more sustainable and effective. Yet, the fluctuation and uncertainties of renewables have led to large challenges to the voltage stability in distribution power systems. This paper proposes a multi-agent deep reinforcement learning method to address the issue. The voltage control issue of the distribution system is modeled as the Markov Decision Process, while each grid-connected interface inverter of renewables is modeled as a deep neural network (DNN) based agent. With the designed reward function, the agents will interact with and seek for the optimal coordinated voltage-var control strategy. The offline-trained agents will execute online in a decentralized way to guarantee the voltage stability of the distribution without any extra communication. The proposed method can effectively achieve a communication-free and accurate voltage-var control of the distribution system under the uncertainties of renewables. The case study based on IEEE 33-bus system is demonstrated to validate the method.

-

Optimal convergence rates of totally asynchronous optimization

2022. Xuyang Wu (et al.). 2022 IEEE 61st Conference on Decision and Control (CDC), Cancun, Mexico, 2022, 6484-6490

KonferensLäs mer om Optimal convergence rates of totally asynchronous optimizationAsynchronous optimization algorithms are at the core of modern machine learning and resource allocation systems. However, most convergence results consider bounded information delays and several important algorithms lack guarantees when they operate under total asynchrony. In this paper, we derive explicit convergence rates for the proximal incremental aggregated gradient (PIAG) and the asynchronous block-coordinate descent (Async-BCD) methods under a specific model of total asynchrony, and show that the derived rates are order-optimal. The convergence bounds provide an insightful understanding of how the growth rate of the delays deteriorates the convergence times of the algorithms. Our theoretical findings are demonstrated by a numerical example.

-

Policy Evaluation with Delayed, Aggregated Anonymous Feedback

2022. Guilherme Dinis Chaliane Junior, Sindri Magnússon, Jaakko Hollmén. Discovery Science, 114-123

KonferensLäs mer om Policy Evaluation with Delayed, Aggregated Anonymous FeedbackIn reinforcement learning, an agent makes decisions to maximize rewards in an environment. Rewards are an integral part of the reinforcement learning as they guide the agent towards its learning objective. However, having consistent rewards can be infeasible in certain scenarios, due to either cost, the nature of the problem or other constraints. In this paper, we investigate the problem of delayed, aggregated, and anonymous rewards. We propose and analyze two strategies for conducting policy evaluation under cumulative periodic rewards, and study them by making use of simulation environments. Our findings indicate that both strategies can achieve similar sample efficiency as when we have consistent rewards.

-

A New Family of Feasible Methods for Distributed Resource Allocation

2021. Xuyang Wu, Sindri Magnússon, Mikael Johanson. 2021 60th IEEE Conference on Decision and Control (CDC)

KonferensLäs mer om A New Family of Feasible Methods for Distributed Resource AllocationDistributed resource allocation is a central task in network systems such as smart grids, water distribution networks, and urban transportation systems. When solving such problems in practice it is often important to have non-asymptotic feasibility guarantees for the iterates, since overall-location of resources easily causes systems to break down. In this paper, we develop a distributed resource reallocation algorithm where every iteration produces a feasible allocation. The algorithm is fully distributed in the sense that nodes communicate only with neighbors over a given communication network. We prove that under mild conditions the algorithm converges to a point arbitrarily close to the optimal resource allocation. Numerical experiments demonstrate the competitive practical performance of the algorithm.

-

A flexible framework for communication-efficient machine learning

2021. Sarit Khirirat (et al.). Proceedings of the AAAI Conference on Artificial Intelligence 35 (9), 8101-8109

ArtikelLäs mer om A flexible framework for communication-efficient machine learningWith the increasing scale of machine learning tasks, it has become essential to reduce the communication between computing nodes. Early work on gradient compression focused on the bottleneck between CPUs and GPUs, but communication-efficiency is now needed in a variety of different system architectures, from high-performance clusters to energy-constrained IoT devices. In the current practice, compression levels are typically chosen before training and settings that work well for one task may be vastly suboptimal for another dataset on another architecture. In this paper, we propose a flexible framework which adapts the compression level to the true gradient at each iteration, maximizing the improvement in the objective function that is achieved per communicated bit. Our framework is easy to adapt from one technology to the next by modeling how the communication cost depends on the compression level for the specific technology. Theoretical results and practical experiments indicate that the automatic tuning strategies significantly increase communication efficiency on several state-of-the-art compression schemes.

-

Compressed Gradient Methods With Hessian-Aided Error Compensation

2021. Sarit Khirirat, Sindri Magnússon, Mikael Johansson. IEEE Transactions on Signal Processing 69, 998-1011

ArtikelLäs mer om Compressed Gradient Methods With Hessian-Aided Error CompensationThe emergence of big data has caused a dramatic shift in the operating regime for optimization algorithms. The performance bottleneck, which used to be computations, is now often communications. Several gradient compression techniques have been proposed to reduce the communication load at the price of a loss in solution accuracy. Recently, it has been shown how compression errors can be compensated for in the optimization algorithm to improve the solution accuracy. Even though convergence guarantees for error-compensated algorithms have been established, there is very limited theoretical support for quantifying the observed improvements in solution accuracy. In this paper, we show that Hessian-aided error compensation, unlike other existing schemes, avoids accumulation of compression errors on quadratic problems. We also present strong convergence guarantees of Hessian-based error compensation for stochastic gradient descent. Our numerical experiments highlight the benefits of Hessian-based error compensation, and demonstrate that similar convergence improvements are attained when only a diagonal Hessian approximation is used.

-

Distributed Newton Method Over Graphs: Can Sharing of Second-Order Information Eliminate the Condition Number Dependence?

2021. Erik Berglund, Sindri Magnússon, Mikael Johansson. IEEE Signal Processing Letters 28, 1180-1184

ArtikelLäs mer om Distributed Newton Method Over GraphsOne of the main advantages of second-order methods in a centralized setting is that they are insensitive to the condition number of the objective function's Hessian. For applications such as regression analysis, this means that less pre-processing of the data is required for the algorithm to work well, as the ill-conditioning caused by highly correlated variables will not be as problematic. Similar condition number independence has not yet been established for distributed methods. In this paper, we analyze the performance of a simple distributed second-order algorithm on quadratic problems and show that its convergence depends only logarithmically on the condition number. Our empirical results indicate that the use of second-order information can yield large efficiency improvements over first-order methods, both in terms of iterations and communications, when the condition number is of the same order of magnitude as the problem dimension.

-

Improved Step-Size Schedules for Noisy Gradient Methods

2021. Sarit Khirirat (et al.). ICASSP 2021 - 2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 3655-3659

KonferensLäs mer om Improved Step-Size Schedules for Noisy Gradient MethodsNoise is inherited in many optimization methods such as stochastic gradient methods, zeroth-order methods and compressed gradient methods. For such methods to converge toward a global optimum, it is intuitive to use large step-sizes in the initial iterations when the noise is typically small compared to the algorithm-steps, and reduce the step-sizes as the algorithm progresses. This intuition has been con- firmed in theory and practice for stochastic gradient methods,but similar results are lacking for other methods using approximate gradients. This paper shows that the diminishing step-size strategies can indeed be applied for a broad class of noisy gradient methods. Unlike previous works, our analysis framework shows that such step-size schedules enable these methods to enjoy an optimal O(1/k) rate. We exemplify our results on zeroth-order methods and stochastic compression methods. Our experiments validate fast convergence of these methods with the step decay schedules.

-

On the Convergence of Step Decay Step-Size for Stochastic Optimization

2021. Xiaoyu Wang, Sindri Magnússon, Mikael Johansson. Advances in Neural Information Processing Systems 34 (NeurIPS 2021), 14226-14238

KonferensLäs mer om On the Convergence of Step Decay Step-Size for Stochastic OptimizationThe convergence of stochastic gradient descent is highly dependent on the step-size, especially on non-convex problems such as neural network training. Step decay step-size schedules (constant and then cut) are widely used in practice because of their excellent convergence and generalization qualities, but their theoretical properties are not yet well understood. We provide the convergence results for step decay in the non-convex regime, ensuring that the gradient norm vanishes at an O(ln T /√T ) rate. We also provide the convergence guarantees for general (possibly non-smooth) convex problems, ensuring an O(ln T /√T ) convergence rate. Finally, in the strongly convex case, we establish an O(ln T /T ) rate for smooth problems, which we also prove to be tight, and an O(ln2 T /T ) rate without the smoothness assumption. We illustrate the practical efficiency of the step decay step-size in several large scale deep neural network training tasks.

-

Distributed Optimal Voltage Control With Asynchronous and Delayed Communication

2020. Sindri Magnússon, Guannan Qu, Na Li. IEEE Transactions on Smart Grid 11 (4), 3469-3482

ArtikelLäs mer om Distributed Optimal Voltage Control With Asynchronous and Delayed CommunicationThe increased penetration of volatile renewable energy into distribution networks necessities more efficient distributed voltage control. In this paper, we design distributed feedback control algorithms where each bus can inject both active and reactive power into the grid to regulate the voltages. The control law on each bus is only based on local voltage measurements and communication to its physical neighbors. Moreover, the buses can perform their updates asynchronously without receiving information from their neighbors for periods of time. The algorithm enforces hard upper and lower limits on the active and reactive powers at every iteration. We prove that the algorithm converges to the optimal feasible voltage profile, assuming linear power flows. This provable convergence is maintained under bounded communication delays and asynchronous communications. We further numerically test the performance of the algorithm using the full nonlinear AC power flow model. Our simulations show the effectiveness of our algorithm on realistic networks with both static and fluctuating loads, even in the presence of communication delays.

-

On Maintaining Linear Convergence of Distributed Learning and Optimization Under Limited Communication

2020. Sindri Magnússon, Hossein Shokri-Ghadikolaei, Na Li. IEEE Transactions on Signal Processing 68, 6101-6116

ArtikelLäs mer om On Maintaining Linear Convergence of Distributed Learning and Optimization Under Limited CommunicationIn distributed optimization and machine learning, multiple nodes coordinate to solve large problems. To do this, the nodes need to compress important algorithm information to bits so that it can be communicated over a digital channel. The communication time of these algorithms follows a complex interplay between a) the algorithm's convergence properties, b) the compression scheme, and c) the transmission rate offered by the digital channel. We explore these relationships for a general class of linearly convergent distributed algorithms. In particular, we illustrate how to design quantizers for these algorithms that compress the communicated information to a few bits while still preserving the linear convergence. Moreover, we characterize the communication time of these algorithms as a function of the available transmission rate. We illustrate our results on learning algorithms using different communication structures, such as decentralized algorithms where a single master coordinates information from many workers and fully distributed algorithms where only neighbours in a communication graph can communicate. We conclude that a co-design of machine learning and communication protocols are mandatory to flourish machine learning over networks.

Visa alla publikationer av Sindri Magnússon vid Stockholms universitet